The Future of Artificial Intelligence in the EU: Impacts and Risks Across Industries

Artificial Intelligence has been in the spotlight of European Union institutions for several years now, and as AI progresses, the EU has to update its response to the threats and opportunities the technology brings.

Because reaching a common decision on this subject is a bureaucratic process involving talks between the Commission, the Parliament, and the Council, some EU member states have decided to tackle the challenges individually, with general or cross-sectoral measures that regulate AI within their own borders. Two examples are Italy and Romania.

Italy banned ChatGPT over data protection concerns and later lifted the ban once more “clarifications” were added. At the same time, Romania expressed its intention to limit Artificial Intelligence over cybersecurity concerns. The proposed bill states that citizens who break the law could face up to two years in jail.

To shed more light on this topic, we contacted EU spokespersons representing 12 fields and three institutions: the Commission, the Parliament, and the Council. Although we had not received replies from the Parliament at the time of publishing, four European Commission spokespersons and heads of unit representing key policy areas answered our questions about the future of AI in the European Union.

In the Spotlight:

- Artificial Intelligence and Digital Economy, Research and Innovation in the EU
- How AI will impact the education sector in the EU
- Artificial Intelligence in the EU’s Justice Sector
- The Use of AI in Migration and Internal Security in the EU
- The European Union Council’s Position on AI
- The Future of ChatGPT and LLMs in the EU

Artificial Intelligence in the EU Digital Economy

The first thing that comes to mind when we talk about the digital economy is online trading, digital goods, and everything in between. On this note, we got in touch with the European Commission’s Coordinating Spokesperson for Digital Economy, Research and Innovation, Johannes Bahrke, to shed more light on how Artificial Intelligence is going to change this in the EU.

In his response, the Commission representative pointed out that AI comes with specific risks for some industries and sectors, and that the EU’s goal is to make AI a safe environment for the entire community. The official also shared two key reference documents for the AI regulation process in the EU, stating that: “The new AI Act that we proposed in April 2021 will make sure that Europeans can trust what AI has to offer. Balanced, risk-based and future-proof rules will address the specific risks posed by AI systems and set the highest standard worldwide. In addition, the updated Coordinated Plan on AI puts forward key actions to promote EU’s global leadership on secure, trustworthy and human-centred Artificial Intelligence.”

However, Artificial Intelligence progressed considerably between 2022 and June 2023, which calls for updated versions of the AI Act proposal and the Coordinated Plan on AI, both of which date from April 2021.

The ball is now in the hands of the European Parliament and the Council, as negotiations between the two institutions continue. Before that, however, a European Parliament press release on the new AI Act states that the Parliament’s draft should be approved in plenary between June 12-15, 2023. The negotiations are expected to be tough, as national, corporate, and public interests, as well as safety, are at stake.

Artificial Intelligence and Education in the European Union

In the education sector, Artificial Intelligence has proved to be a double-edged sword, with both benefits and disadvantages. This applies to other fields as well, but in education it has been especially visible since November 2022, when ChatGPT was released.

On the one hand, there are AI-powered feedback tools, automated processes, and fewer opportunities for discrimination in grading and evaluation. On the other hand, generative AI tools such as ChatGPT and Jasper have been, and will likely continue to be, used to generate work that students were supposed to produce from their own learning.

The European Commission is closely monitoring developments in the use of AI in education. Georgi Dimitrov, Head of Unit for Digital Education, told TechBehemoths that “As part of the Digital Education Action Plan, in 2022 the Commission updated the Digital Competences Framework 2.2, to include AI and data.” In other words, the EU is embracing AI in education, but within specific limits. A good example is Action 6 of the Digital Education Action Plan, which sets out ethical guidelines on how educators (and others) should use AI and data in teaching and learning. In turn, these ethical guidelines complement the Digital Decade plan, which shapes targets for digital skills and the labor market by 2030.

What is still not clear from the answer is which specific AI tools and variants will be limited (at least in education). Nor did the answer directly address how the European Commission plans to tackle the threats and risks of generative AI in education.

What is clear, however, is that the upcoming AI Act, the outcome of negotiations between the Parliament and the Council, will play a decisive role in how the European Union regulates AI tools of every level and complexity.

Artificial Intelligence in the EU Justice Sector

In the EU justice system, Artificial Intelligence already plays an important role in reducing costs, supporting training, and developing analytical capabilities. However, AI comes with risks as well. As the Commission representative put it: “Apart from the technical and operational hurdles which should be considered, there are concerns about transparency, accountability, and the potential for misuse.”

On this note, the official referred to the 2021 AI Act proposal, which sets rules for the use of AI, including in the justice sector. The most relevant part of the document is Title II, Prohibited Artificial Intelligence Practices, which covers the following:

- Commercializing AI systems/technologies that have the potential or are intended to cause harm;
- Commercializing AI systems/technologies that exploit vulnerabilities and have the potential to cause psychological or physical harm;
- Placing on the market or using AI systems, by public authorities or on their behalf, to evaluate or classify real persons based on their social behavior patterns (so-called social scoring).

At the same time, the document states that “A Member State may decide to provide for the possibility to fully or partially authorise the use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement within the limits and under the conditions listed [...]. That Member State shall lay down in its national law the necessary detailed rules for the request, issuance and exercise of, as well as supervision relating to, the authorisations referred to in paragraph 3.”

In other words, the EU already set out to regulate the use of AI back in 2021, but the AI Act should be updated in light of new developments that are currently not regulated, including in the justice system.

The representatives also mentioned that AI could be successfully used in judicial training, optimizing processes and helping with case analysis. In this direction, the Commission carried out a study in 2020 on the use of innovative technologies in the justice field, which, in our view, should also be updated.

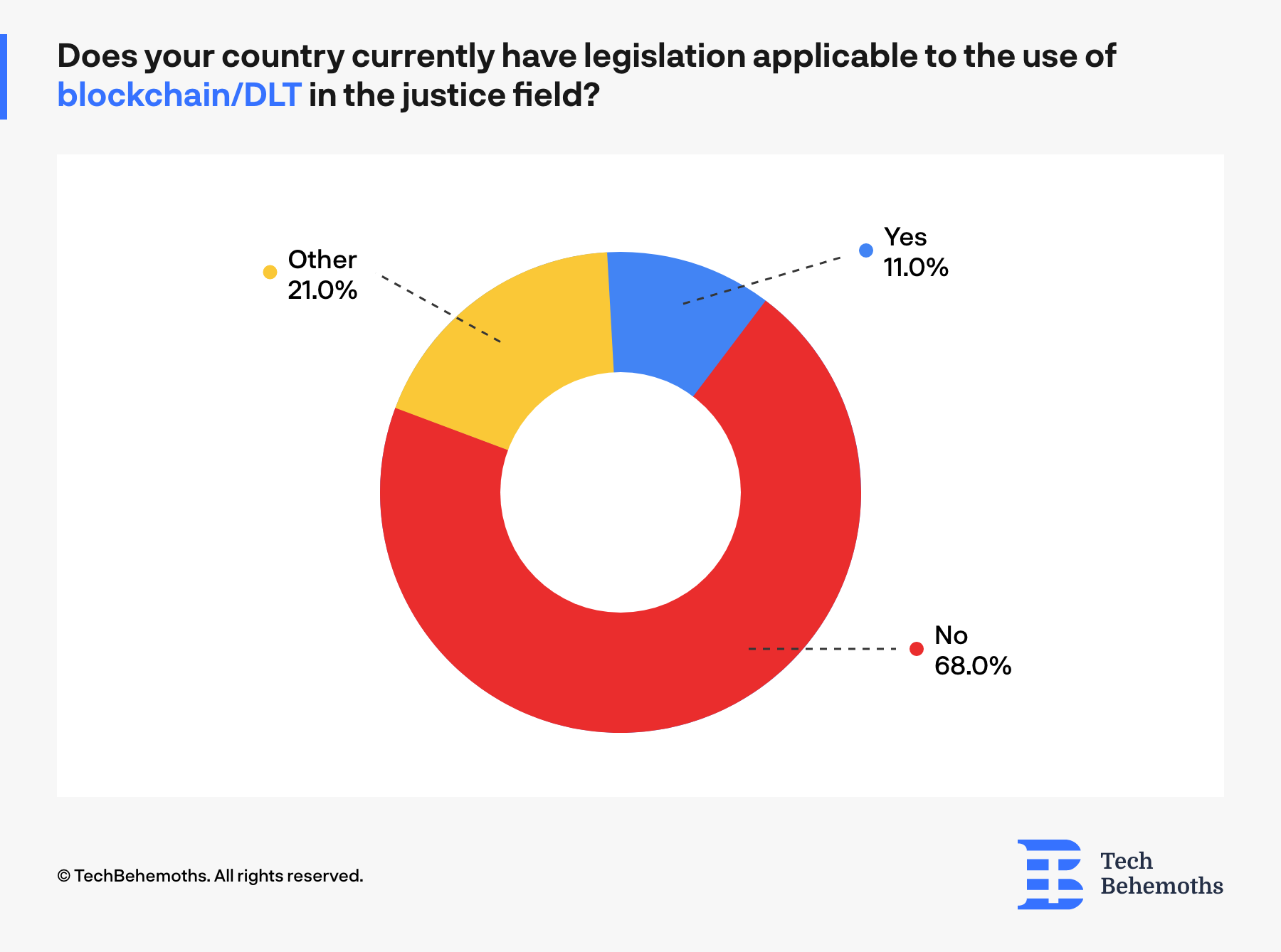

A closer look at the study shows that the EU also researched the possibility of implementing blockchain technologies in the judicial sector. According to the study, only 11% of respondents stated that their Member State has legislation applicable to blockchain/DLT in the justice field.

Source: Study on the use of innovative technologies in the justice field

AI in Migration and Internal Security in the EU

Regarding AI in EU migration and internal security, we received an answer from Martin Übelhör, Deputy Head of Unit, Directorate-General for Migration and Home Affairs, Home Innovation & Security Research.

He stated that “the co-legislators of the EU are currently negotiating the future EU AI Act as part of a broader strategy,” and that “The legal boundaries for the use of AI tools will be set in the AI Act.”

As for recommendations to EU-based IT companies that develop AI services, Martin Übelhör advises them to be aware of the forthcoming regulatory requirements and principles and to assess themselves against them.

According to him, the list of EU-funded projects in this regard includes:

He also pointed to funding opportunities for AI start-ups and SMEs:

Companies involved in or developing AI technologies and products are invited to apply for these opportunities.

The European Union Council’s Position on AI

In response to our request about the EU Council’s position on AI, the Public Information Service of the General Secretariat of the Council of the EU gave a brief, dry answer that included a disclaimer stating that it may not be regarded as constituting an official position of the Council.

The response included two links: one to the Council’s position as of December 2022, and the other to the search results for all AI-related topics and documents on the Council’s website.

The first link contains general information that reads like a summary of what the Commission representatives told us in detail.

Through the second link, we found an internal document marked PUBLIC that sheds more light on specific topics, including the Europol sandbox environment, the Accountability Principles for Artificial Intelligence (AP4AI), and “ChatGPT – The impact of Large Language Models (LLM) on Law Enforcement”.

The Future of ChatGPT and LLMs in the EU

Ever since Italy blocked and then reinstated ChatGPT, the debate on how to regulate LLMs in the EU has become a hot topic. In just a couple of months, there have been multiple exchanges, online and elsewhere, between EU officials and Sam Altman, the co-founder and CEO of OpenAI.

The most recent public statement came at the end of May 2023, when Altman told Time that OpenAI could leave Europe over the new AI Act currently being negotiated between the Council and the Parliament.

However, at the beginning of June 2023, Altman met with European Commission President Ursula von der Leyen, after a series of high-level discussions with French President Emmanuel Macron and British Prime Minister Rishi Sunak.

Before that, on May 8, 2023, an internal document, already classified as public, presented the results of a Europol Innovation Lab workshop, stating: “The key findings suggest that LLMs such as ChatGPT can be abused by criminals for a number of crime areas, among them fraud and social engineering, disinformation and propaganda, as well as cybercrime and child sexual abuse, notably the online grooming of children. ChatGPT’s ability to draft highly authentic texts on the basis of a user prompt helps generate criminal content at speed and at scale. At the same time, these types of models can facilitate the production of malicious codes for the purpose of cyberattacks even if the criminal has little technical knowledge.”

The findings are indeed worrying, and if they hold, LLMs including ChatGPT may be strictly regulated by the European Union in an attempt to prevent cybercrime, social engineering, and child sexual abuse, among other harms.

However, there is also a positive side: LLMs can help law enforcement agencies. The document also states that “LLMs may however also offer some benefits to law enforcement agencies. These include supporting officers investigating unfamiliar crime areas, facilitating open-source research and intelligence analysis, as well as the development of technical investigative tools. The use of LLMs by law enforcement would, however, require a secure environment and thorough assessments with regard to safeguarding fundamental rights and mitigating potential bias.”

While the fate of the new AI Act is still undecided, it is very likely that the use of Artificial Intelligence in the EU will be monitored and, to some extent, limited because of the risks it poses across all sectors.