A Comprehensive History And Explanation of OpenAI’s DALL-E

Summary

OpenAI has rapidly transformed AI from text generation to creating stunning visual content with tools like DALL-E, DALL-E 2, and DALL-E 3.

This article explains how OpenAI’s AI innovations intersect with creativity, ethics, and regulation:

- AI-generated imagery – transforming text prompts into original visuals, pushing the boundaries of creativity.

- Ethical challenges – copyright, user consent, and fairness in training datasets remain critical concerns.

- Platform responses – DeviantArt, Shutterstock, and Getty Images present different strategies for integrating or restricting AI-generated art.

- Regulation and transparency – OpenAI’s safeguards and how the company is responding to global AI regulations.

With a responsible approach and the development of strategic partnerships, artificial intelligence tools like DALL-E are reshaping the creative industry and defining the future of generative artificial intelligence.

In 2015, a small startup by the name of OpenAI was founded. AI development was not as much at the forefront of everyone’s mind as it is in 2025, and no one could have suspected the company’s meteoric rise. By now, OpenAI’s projects, including ChatGPT and DALL-E 3, are central to both creative and enterprise AI applications. They have garnered as much fame as infamy, and they show no signs of stopping, much to the chagrin of both competitors and critics. In this article, we will explore one of OpenAI’s tools in particular: DALL-E and its second iteration. We start back at the company’s beginning and give an overview of its biggest scandals and achievements along the way, in hopes of illustrating both the excitement and the ire that the firm has attracted. So, let’s start!

A timeline of innovation

OpenAI launched in 2015, but the robust, opinionated media coverage and monitoring of the company’s activities would not start until 2019, when OpenAI debuted GPT-2. GPT-2 was a simple text-generation model, designed to predict which words are likely to come next in a piece of writing. It comprised a comparatively small 1.5 billion parameters and was trained on a dataset of about 8 million web pages. By 2025, the GPT line had evolved to GPT-4.5, powering advanced multimodal applications used by over 200 million users worldwide.

The move was small and a far cry from OpenAI’s later endeavors, but it was a sign of things to come. In January 2021, the company was ready to start its conquest of the AI world, launching two companion models, “DALL-E” and “CLIP”. DALL-E, our subject of interest, is the first iteration of OpenAI’s multimodal, text-to-image generating AI. Its name is a portmanteau of the famous Surrealist painter Salvador Dalí and Pixar fans’ favorite junkyard robot, WALL-E. As of 2025, DALL-E 3 is the latest version, offering higher-resolution output than its predecessors along with enhanced safety and copyright filters, and reaching over 50 million monthly users.

“Multimodal”? “Text-to-image”? A lot of big words there, so let’s unpack! DALL-E’s multimodality is simply shorthand for its ability to interpret both text and images. “Text-to-image” means that DALL-E takes a text-based instruction from a user, known as a “prompt”, and generates an image from it. DALL-E has 12 billion parameters and is a customized version of GPT-3, the successor to GPT-2. Unlike plain GPT-3, it extends its use cases from the realm of text into the realm of the visual.

Writing for “Interesting Engineering”, Brittney Grimes explains: “How does it produce the art? It uses the algorithm within the words and places them in a series of vectors or text-to-image embeddings. Then, the AI creates an original image from the generic representation it was presented with from its datasets, based on text added by the user creating the art.”

This custom GPT-3 model, much like its predecessor, requires training data, both textual and visual. Remember this for later, when we deep-dive into a few of the firm’s controversies.

CLIP, a neat shorthand for “Contrastive Language–Image Pre-training”, does the exact opposite of DALL-E; it interprets images, assigning a corresponding text to them. OpenAI used CLIP to check how well DALL-E was generating the images. If CLIP gave a close enough prompt to what was requested by the user, then the OpenAI team knew they were heading in the right direction. Put simply: If CLIP-generated text was accurate enough, then DALL-E’s image was good enough, too.
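To make the idea of “checking” an image against a prompt a little more concrete, here is a minimal sketch of similarity scoring, one common way of comparing text and images once both have been turned into vectors. The three-number vectors and the names `candidate_a`, `candidate_b`, and `candidate_c` are made up for illustration; they are not real CLIP outputs, and this is not OpenAI’s code.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_images(prompt_embedding, image_embeddings):
    """Sort candidate images from best to worst match for the prompt."""
    scored = [
        (name, cosine_similarity(prompt_embedding, embedding))
        for name, embedding in image_embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical embeddings: a real encoder would produce hundreds of dimensions.
prompt_embedding = [0.9, 0.1, 0.3]
candidate_images = {
    "candidate_a": [0.8, 0.2, 0.4],
    "candidate_b": [0.1, 0.9, 0.2],
    "candidate_c": [0.5, 0.5, 0.5],
}

ranking = rank_images(prompt_embedding, candidate_images)
best_name, best_score = ranking[0]
print(best_name, round(best_score, 3))
```

The higher the score, the closer the image sits to the prompt in the shared vector space, which is the sense in which a good score suggests the generated image is “good enough”.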

This would not be CLIP’s final soiree, however. Just over a year later, on April 6, 2022, DALL-E 2 was revealed to the world. Where DALL-E’s images had been met with interest and an equal measure of mockery for their grotesque, primitive quality, DALL-E 2 boasted a quadrupled image resolution and a streamlined architecture of 3.5 billion parameters for image generation, with a further 1.5 billion tasked with enhancing the results, according to Grimes’ coverage. The enhanced model’s showcases looked impressive, and heads began to turn, minds began to churn, and the words “artificial intelligence” began echoing louder and louder from every corner of the digital market and scientific world. The pressure was building inside the speculative bubble of AI, and the world was eager to get its hands on the new model, some out of worry, others out of amazement and disbelief.

DALL-E 2 continued to be used widely through 2023 and 2024. Its successor, DALL-E 3, was announced in September 2023 and made available through ChatGPT shortly afterwards, promising more realistic outputs that follow prompts more closely. OpenAI has not published a parameter count for DALL-E 3.

The new model used CLIP again, this time directly at its core. Large image datasets were once again fed into DALL-E 2’s neural network, then CLIP was tasked with their textual and contextual interpretation into an “intermediate form”, with which the AI can work. A diffusion model would then take that intermediate form and iterate on it until the image was finished. Will Douglas Heaven, for the MIT Technology Review, writes: “Diffusion models are trained on images that have been completely distorted with random pixels. They learn to convert these images back into their original form. In DALL-E 2, there are no existing images. So the diffusion model takes the random pixels and, guided by CLIP, converts them into a brand new image, created from scratch, that matches the text prompt.”
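For readers who like to see the loop spelled out, here is a toy sketch of the “start from random pixels and clean them up step by step” idea. It is a deliberate simplification under loud assumptions: a real diffusion model uses a trained neural network to predict the clean image at each step, whereas the hypothetical `predicted_clean` list below simply stands in for that prediction, and the “image” is just five grayscale pixels.

```python
import random

def add_noise(image, noise_level, rng):
    """Training-side corruption: blend each pixel with a random value."""
    return [(1 - noise_level) * p + noise_level * rng.random() for p in image]

def denoise_step(noisy, predicted_clean, step_size):
    """Move every pixel a fraction of the way toward the predicted clean image."""
    return [n + step_size * (c - n) for n, c in zip(noisy, predicted_clean)]

def generate(predicted_clean, steps, rng, step_size=0.2):
    """Start from pure random pixels and refine them over several steps."""
    image = [rng.random() for _ in predicted_clean]
    for _ in range(steps):
        image = denoise_step(image, predicted_clean, step_size)
    return image

def mean_abs_error(a, b):
    """Average absolute difference between two pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

rng = random.Random(0)
# Assumption: stands in for what a trained, prompt-guided network would predict.
predicted_clean = [0.0, 0.25, 0.5, 0.75, 1.0]

result = generate(predicted_clean, steps=30, rng=rng)
print(mean_abs_error(result, predicted_clean) < 0.01)
```

Each pass removes a fixed share of the remaining noise, so the picture sharpens gradually rather than appearing all at once, which is the intuition behind the iterative process Heaven describes.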

This entire process, as simplified above, would be elucidated on April 13, 2022, when OpenAI published “Hierarchical Text-Conditional Image Generation with CLIP Latents”, a research paper detailing their new generation method.

DALL-E 2 was not yet ready for the general public; a private Beta, hidden behind a waiting list and accessible mostly to researchers, was all that stoked the fires of interest around OpenAI’s new and exciting venture. That was until September 28, 2022, when the Beta waiting list was removed. Just over a month later, on November 3, a public Beta of the “DALL-E API” followed, allowing the AI to be integrated into external apps and projects. By 2025, the API has been widely expanded, enabling global integration into creative and commercial platforms, and OpenAI has increased server capacity and support for enterprise clients to handle the growing demand. Not all was as it seemed, however, as each of OpenAI’s new moves brought another deluge of criticism.

Mounting Critique of OpenAI’s Practices

In the section “Planning for AGI and beyond” on OpenAI’s website, they proudly state: “Our mission is to ensure that artificial general intelligence—AI systems that are generally smarter than humans—benefits all of humanity.” The development of such AI models has always been the company’s goal. On the purely technical side, Oren Etzioni, CEO of the Allen Institute for Artificial Intelligence (“AI2”), found himself cautious in his praise for DALL-E 2, saying: “This kind of improvement isn’t bringing us any closer to AGI, […] we already know that AI is remarkably capable at solving narrow tasks using deep learning. But it is still humans who formulate these tasks and give deep learning its marching orders.” Prafulla Dhariwal of OpenAI attempted to explain: “Building models like DALL-E 2 that connect vision and language is a crucial step in our larger goal of teaching machines to perceive the world the way humans do, and eventually developing AGI.” For added context, a deep learning algorithm is a multilayered neural network, a type of machine learning, which has the capability of a lot of automatic quasi-reasoning and learning, eliminating some of the human expert backend usually needed for AI endeavors up until this point.
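Since “multilayered neural network” and “parameters” come up so often in this story, here is a minimal sketch of both, assuming a tiny two-layer network with hand-picked weights. The numbers are invented for illustration and have nothing to do with any OpenAI model; the point is only that a model’s “parameters” are the weights and biases it learns.

```python
def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias for each output."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def relu(values):
    """Simple non-linearity: negative values become zero."""
    return [max(0.0, v) for v in values]

def forward(inputs, layers):
    """Pass the inputs through every layer, with ReLU between layers."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations

def count_parameters(layers):
    """Parameters are all the weights plus all the biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)

# Hypothetical weights: layer 1 maps 2 inputs to 3 values, layer 2 maps 3 to 1.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

output = forward([1.0, 2.0], layers)
print(count_parameters(layers), output)
```

This toy network has 13 parameters. DALL-E’s 12 billion follow the same principle at a vastly larger scale, with training adjusting every one of them automatically instead of a human choosing them by hand.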

OpenAI also admits the imperfections of its newest AI model. Alex Nichol, a researcher for OpenAI, recounted a humorous event for the Smithsonian Magazine, where, upon asking DALL-E 2 to “put the Eiffel Tower on the moon”, the moon instead simply floated above the Eiffel Tower, leaving natural order unchanged. Prompts that combine two or more objects or attributes often seem to be the cause of these hiccups.

As of 2025, OpenAI continues to refine DALL-E 2 and DALL-E 3, reducing such errors in spatial reasoning. Similar humorous quirks still occur occasionally, but the system now handles complex prompts with far greater accuracy.

This focus on the technical, I must admit, belies the much larger ethical debacles at the forefront of OpenAI’s ventures. Antonia Mufarech, writing for the Smithsonian Magazine and referencing an article on The Verge, explains: “Other than imperfections in its automation, DALL-E 2 also poses ethical questions. Although images created by the system have a watermark indicating the work was A.I.-generated, it could potentially be cropped out.”

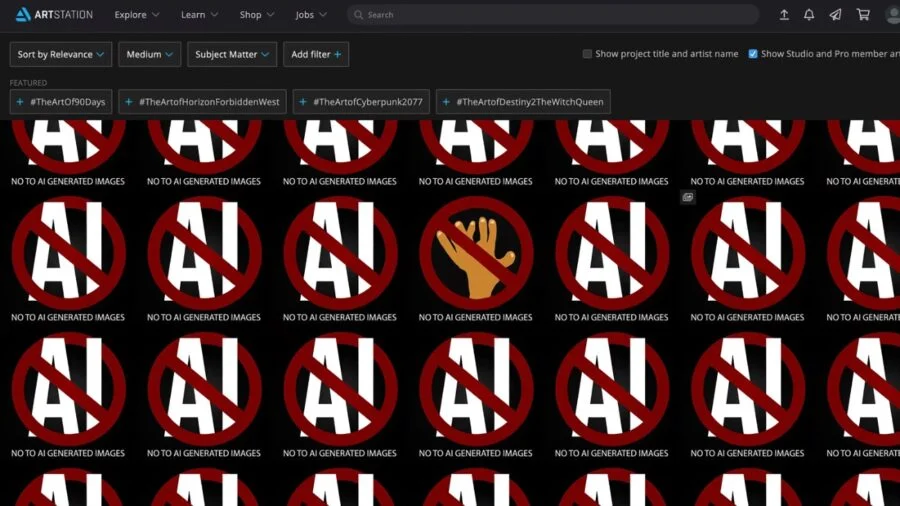

DALL-E, and art-generating artificial intelligence like it, also poses a problem for many artists, such as the users of the online art platform DeviantArt, who expressed vehement disapproval when the website launched its own AI tool, “DreamUp”, on November 11, 2022. According to the company’s official announcement blog, DreamUp allows users to “DreamUp almost anything [they] can imagine”. It used training data gathered by LAION (Large-scale Artificial Intelligence Open Network) and was launched with all users automatically opted in to the service, meaning users’ creations on DeviantArt were fed into the algorithm unless the users chose to opt out. An update on the same announcement blog post now reads: “We heard the community feedback, and now all deviations are automatically labeled as NOT authorized for use in AI datasets.” By 2025, DeviantArt and other platforms have updated their policies to require clearer opt-in/opt-out choices for AI training, following ongoing community feedback about unauthorized use of artists’ work.

Users flooded the comment section directly under the blog post to voice their disappointment, with one user noting: “Why do real artists have to share Deviantart with fakers who don't even put in the effort or practice?” Another wrote passionately: “It has now been nine months since you launched this abomination. DA is flooded with AI trash. Your AI label, added more than a year too late as nothing more than a recommendation, is being ignored by lots of AI artists.

“Personally, I think you should be sued for false advertising every time you call DA an art website or a place for artists. You have turned an art website into a pathetic marketplace, killing the community here in the process. You have absolutely no respect for artists and their rights. You clearly do not care about the users' opinions and decisions. You – [DeviantArt] team – are despicable!” Others spoke with less eloquence and with words and phrases that might be better left out of this article.

The slew of bones that artists have to pick with image-generating AI could be the topic of an entirely different set of articles. Unfortunately, the backlash seems to fall on deaf ears at OpenAI, which trudges on steadily towards its stated AGI goal. In May 2023, OpenAI CEO Sam Altman went on record citing “many concerns” about new AI regulations being developed in the EU, which could potentially lead OpenAI to the drastic decision of leaving the EU entirely. Transparency seems to be a large issue, with Altman claiming that there are “technical barriers to what’s possible” and co-founder Ilya Sutskever expressing disapproval of OpenAI’s former methods of transparency, since sharing too much reportedly leads to copying by rival businesses. This is all according to The Verge.

Some of the legislation would also require OpenAI to release “summaries of copyrighted data used for training”, a potentially fruitful basis for catastrophic lawsuits, which could stifle the company’s growth and hinder future endeavors. OpenAI isn’t staying completely inactive when it comes to safety precautions, however. Its code of conduct forbids the generation of various types of hurtful content, including “hate”, “self-harm”, “harassment”, “violence”, and images of a sexual nature. The policy also “encourages” users to disclose AI involvement in their work. The DALL-E creators have also allegedly “limited the ability for DALL·E 2 to generate violent, hate, or adult images. By removing the most explicit content from the training data, [and] minimizing DALL·E 2’s exposure to these concepts.” In an additional effort to curb the misuse of public figures’ likenesses, they have “also used advanced techniques to prevent photorealistic generations of real individuals’ faces, including those of public figures.”

Conclusion

Will codes of conduct and slap-on-the-wrist “encouragements” be sufficient to stifle the work of bad actors? As shown by DeviantArt’s example, most likely not. OpenAI’s fear of regulation and its coy engagement with the topic are as understandable as they may be disappointing to some.

Some companies are also fighting back against the AI craze, such as Getty Images, which banned images created by image-generating AI, like DALL-E 2, from being hosted on its stock photo database. The CEO of Getty Images, Craig Peters, stated: “There are real concerns with respect to the copyright of outputs from these models and unaddressed rights issues with respect to the imagery, the image metadata, and those individuals contained within the imagery... We are being proactive for the benefit of our customers.” On the flipside, popular stock image website Shutterstock partnered with OpenAI on October 26, 2022, integrating DALL-E 2 into its service to generate AI-made stock images. Less than a year later, on July 11, 2023, the relationship between the two deepened, as Shutterstock announced a six-year partnership to provide training data from its service to OpenAI.

As of 2025, Shutterstock continues its partnership with OpenAI, now incorporating DALL-E 3 training datasets, while Getty Images has reinforced its AI content policies, extending them to all AI-generated works released post-2024.

According to Mack DeGeurin, writing for Gizmodo, Shutterstock promised a “‘Contributor Fund’ to compensate contributors whose Shutterstock images were used to help develop the tech. If successful, Shutterstock’s model could set the standard for human artist compensation in an AI art space that, until now, has largely been a Wild West of legal and financial ambiguity. In addition to the fund, Shutterstock said it aims to compensate contributors with royalties when DALL-E uses their work in its AI art.”

According to posters on the MicrostockGroup Forum, however, not all is as it seems. They detailed low Contributor Fund payouts, difficult opt-out procedures (as users are, of course, automatically opted in to the service, much like DeviantArt’s DreamUp functioned at first), and a lack of transparency about where to even look for the Contributor Fund’s measly payments. A user going by “Mantis” on the forum asked to opt out and received an email from the Shutterstock Contributor Care Team, which read: “our Tech Team will introduce a way for all contributors to opt out of having their content included in datasets. Until then, your patience is much appreciated.” Another user aptly reacted: “It's amazing that something is made possible for buyers without any problems, but at the same time, it takes weeks for you to be able to undo it as a contributor. I can't see any appreciation of the contributors here. On the contrary.”

Some see dark clouds forming above the AI landscape inside of our global, digital marketing world, and others, like OpenAI itself, continue with excitement, a vivacious march forward, and into the uncertain future. Only time can tell what will become of OpenAI’s DALL-E, as well as the company’s future work, but either way, stagnation throughout the complex proceedings seems unlikely.

Update - September 2025

Will codes of conduct and "incentives" be enough to stifle bad actors in AI-generated content? Evidence from 2025 suggests not entirely. DeviantArt, Shutterstock, and Getty Images continue to face backlash, copyright disputes, and public concern about the ethics of AI.

Shutterstock implemented clearer opt-in/opt-out policies for AI datasets in 2025, while Getty Images maintains a ban on AI-generated images created after 2024, prioritizing copyright protection. OpenAI, for its part, keeps updating the safeguards in DALL-E 2 and DALL-E 3 to limit the generation of harmful content and to protect against copyright infringement.

Of course, companies still face challenges related to regulatory gaps, transparency, user consent, and fair compensation. Even as regulators and the public demand stricter accountability, that hasn't stopped OpenAI and other AI developers from continuing to expand and advance the possibilities of AI-powered creation.

What we can conclude is that the future of DALL-E art, and of AI-powered creativity in general, remains as dynamic and uncertain as ever. Continuous innovation and adaptation seem inevitable, so stay tuned for changes.
